The humble robots.txt file has long been viewed as a simple traffic controller for search engines. Paired with logging, monitoring, and your existing defenses, it can also become a useful security asset that helps protect your website and brand. Let me share how this transformation happens and why it matters for your digital presence.

The Hidden Power of Simple Tools

When I first encountered robots.txt while building an identity management platform, I saw it as most developers do: a basic text file telling search engines where they could and couldn't go. Then one incident changed my perspective entirely. Our servers suddenly started getting hammered with requests, causing significant performance issues. The culprit? A poorly configured robots.txt file that left our API endpoints open to crawlers, which hit them repeatedly. This experience taught me a valuable lesson: even the simplest tools can have profound security implications.

Building Smart Boundaries

Think of robots.txt not as a simple fence but as a marked boundary: compliant crawlers stay out, and anyone who crosses the line tells you something about their intent. Here's how to turn it from a basic crawler directive into a practical security tool:

1. Smart Directory Protection

Start with a security-first configuration:

    User-agent: *
    Disallow: /api/
    Disallow: /admin/
    Disallow: /internal/
    Disallow: /backups/
    Allow: /public/
    Crawl-delay: 5

Together, these directives serve three purposes:

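- Keeping compliant crawlers away from sensitive endpoints such as /api/ and /admin/, so those endpoints are not crawled

- Keeping internal structures out of search results (the file itself is public and names these paths, so they must still be protected by authentication)

- Controlling the rate of access to preserve server resources (Crawl-delay is a nonstandard directive: Bing honors it, while Googlebot ignores it)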
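
You can quickly confirm that the configuration above behaves as intended by running it through Python's standard-library `urllib.robotparser`, which approximates how a compliant crawler reads the file. This is a minimal sketch. `ExampleBot` is a hypothetical user agent. Also note that this parser applies the first matching rule rather than the longest match that RFC 9309 specifies, so results can differ when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

# The security-first configuration from the text, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /api/
Disallow: /admin/
Disallow: /internal/
Disallow: /backups/
Allow: /public/
Crawl-delay: 5
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())  # parse() also marks the rules as loaded
    return parser


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for path in ["/api/users", "/admin/", "/public/logo.png", "/blog/post-1"]:
        # can_fetch() answers: may a compliant crawler request this path?
        print(path, rules.can_fetch("ExampleBot", path))
    print("Crawl-delay:", rules.crawl_delay("ExampleBot"))
```

A compliant crawler is refused `/api/users` and `/admin/`, is allowed `/public/logo.png` and `/blog/post-1`, and is asked to wait 5 seconds between requests. A malicious client simply skips this check, which is why the paths still need real access control.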

2. Creating Digital Tripwires

Paired with access logging, robots.txt can also act as an early warning system. If you list paths that no legitimate visitor or compliant crawler should ever request, you can detect potential threats before they become problems.

For example, list non-existent but sensitive-looking paths and monitor your logs for requests to them:

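    User-agent: *
    Disallow: /backup-database/
    Disallow: /wp-admin/
    Disallow: /admin-panel/

These honeypot directories are not linked anywhere, so a request for one often indicates malicious intent: the client either read robots.txt and went looking, or is running a vulnerability scanner. Your security systems can flag these attempts for further investigation. If your site actually runs WordPress, choose a decoy other than /wp-admin/.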
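
The tripwire idea can be sketched as a simple log scanner. The sketch assumes that your web server writes the Common Log Format and that the three honeypot paths above are linked nowhere on the site. The prefix list, the sample log lines (which use documentation IP ranges), and the `find_tripwire_hits` helper are illustrative, not part of any particular product.

```python
import re
from collections import Counter

# Honeypot prefixes: disallowed in robots.txt and never linked from the site.
TRIPWIRE_PREFIXES = ("/backup-database/", "/wp-admin/", "/admin-panel/")

# Start of a Common Log Format line: host ident user [time] "METHOD PATH
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "(\S+) (\S+)')


def is_tripwire(path: str) -> bool:
    """True if the path (query string ignored) falls under a honeypot prefix."""
    path = path.split("?", 1)[0]
    return any(path == p.rstrip("/") or path.startswith(p) for p in TRIPWIRE_PREFIXES)


def find_tripwire_hits(lines) -> Counter:
    """Count honeypot requests per client IP; malformed lines are skipped."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and is_tripwire(match.group(3)):
            hits[match.group(1)] += 1
    return hits


if __name__ == "__main__":
    sample_log = [
        '198.51.100.4 - - [10/Dec/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120',
        '203.0.113.7 - - [10/Dec/2024:13:55:40 +0000] "GET /backup-database/dump.sql HTTP/1.1" 404 153',
        '203.0.113.7 - - [10/Dec/2024:13:55:41 +0000] "GET /wp-admin HTTP/1.1" 404 153',
    ]
    for ip, count in find_tripwire_hits(sample_log).items():
        print(f"flag {ip}: {count} honeypot request(s)")
```

In production, you would feed the resulting counts to your alerting or blocking pipeline rather than printing them.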

Integrating with Modern Security Systems

The real power of robots.txt emerges when it's integrated with your broader security infrastructure. Here's how to connect the pieces:

1. Web Application Firewall (WAF) Integration

Configure your WAF to monitor robots.txt compliance:

- Track user agents that violate robots.txt directives

- Implement progressive rate limiting for repeat offenders

- Create custom rules based on robots.txt interaction patterns
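
The following sketch illustrates the compliance-tracking and progressive rate-limiting ideas. The class checks each crawler request against robots.txt and doubles the block duration for every repeat violation. It is not a real WAF API. `RobotsComplianceTracker`, the 60-second base penalty, and the one-hour cap are hypothetical choices. The caller is assumed to pass only traffic already classified as automated, because robots.txt governs crawlers, not a browser calling your own /api/ endpoints.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


class RobotsComplianceTracker:
    """Hypothetical helper: count robots.txt violations per client and
    return an escalating block duration for repeat offenders."""

    def __init__(self, robots_txt: str, base_block_s: int = 60, max_block_s: int = 3600):
        self.rules = RobotFileParser()
        self.rules.parse(robots_txt.splitlines())
        self.base_block_s = base_block_s
        self.max_block_s = max_block_s
        # (ip, user agent) -> violation count. Both are spoofable, so this is a heuristic key.
        self.violations: dict[tuple[str, str], int] = {}

    def check(self, ip: str, user_agent: str, path: str) -> int:
        """Return 0 for a compliant request, else the seconds to block the client.

        Assumes the caller passes only traffic already classified as crawler traffic.
        """
        if self.rules.can_fetch(user_agent, path):
            return 0
        key = (ip, user_agent)
        count = self.violations.get(key, 0) + 1
        self.violations[key] = count
        # Progressive penalty: base, 2x base, 4x base, ... capped at max_block_s.
        return min(self.base_block_s * 2 ** (count - 1), self.max_block_s)


if __name__ == "__main__":
    tracker = RobotsComplianceTracker("User-agent: *\nDisallow: /api/\n")
    for path in ["/blog/", "/api/users", "/api/users", "/api/keys"]:
        print(path, tracker.check("203.0.113.7", "BadBot", path))
```

Doubling the penalty keeps an occasional misconfigured crawler from being locked out for long, while a persistent offender quickly reaches the cap.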

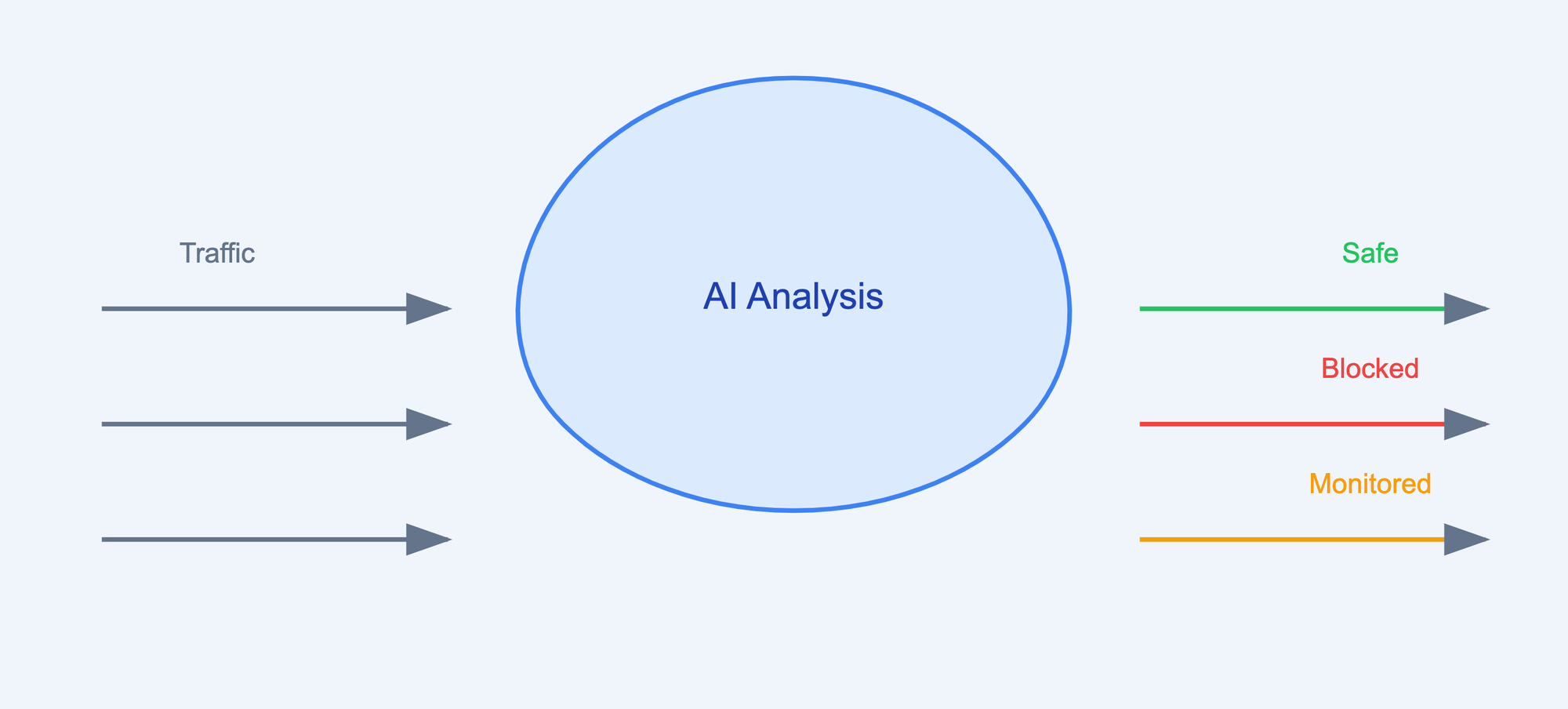

2. AI-Powered Threat Detection

Modern security goes beyond static rules. AI-powered analysis of your access logs, including how each client treats robots.txt, can feed a predictive defense system that:

- Identifies patterns in crawler behavior

- Predicts potential security threats

- Automatically adjusts security responses
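
Identifying patterns in crawler behavior does not have to start with machine learning. As a hedged stand-in, the sketch below flags clients whose request count in a time window is an outlier by the modified z-score, which is based on the median and the median absolute deviation (MAD). This is a robust statistical baseline that an AI model would need to beat. The function name, the 3.5 threshold (a commonly used cutoff for this score), and the one-request MAD floor are assumptions.

```python
from __future__ import annotations

from statistics import median


def flag_outliers(counts: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return the clients whose request count is anomalously high.

    Uses the modified z-score 0.6745 * (x - median) / MAD. The median and
    MAD resist being skewed by the very outliers they are meant to expose.
    """
    if not counts:
        return []
    values = list(counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    # Assumption: floor the MAD at one request so identical counts don't divide by zero.
    mad = max(mad, 1.0)
    return sorted(
        client
        for client, value in counts.items()
        if 0.6745 * (value - med) / mad > threshold
    )


if __name__ == "__main__":
    # Illustrative per-client request counts for one time window.
    window = {"198.51.100.4": 12, "198.51.100.9": 15, "203.0.113.7": 480}
    print(flag_outliers(window))
```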

3. Brand Protection Through Intelligence

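Your robots.txt file can also support brand protection by:

- Opting out of content scraping by crawlers that honor it, including crawlers that collect AI training data, such as OpenAI's GPTBot and Common Crawl's CCBot

- Signaling which digital assets you do not want collected or reused

- Maintaining control over how compliant crawlers access and use your content

Scrapers that ignore the file have to be stopped by the WAF rules and rate limiting described above.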
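
For brand protection, the opt-out is expressed per crawler. The sketch below blocks GPTBot and CCBot entirely, leaves every other crawler subject only to the generic rules, and verifies the result with Python's `urllib.robotparser`. The choice of agents is an example, not a recommended list. A scraper that ignores robots.txt is unaffected.

```python
from urllib.robotparser import RobotFileParser

# Example opt-out: the named crawlers get a blanket Disallow,
# and every other crawler falls through to the generic group.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /api/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

# Pass the crawler's product token ("GPTBot"), not the full User-Agent header:
# this parser matches group names against the text before the first "/" in the
# agent string, so a full "Mozilla/5.0 ..." header would not match.
for agent in ["GPTBot", "CCBot", "Googlebot"]:
    print(agent, rules.can_fetch(agent, "/blog/post-1"))
```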

The Future of Web Security

As we look ahead, robots.txt is likely to play an increasingly important role in web security. Here's what's on the horizon:

1. Dynamic Defense Systems

Future implementations, likely generated by the server rather than edited by hand, may include:

- Real-time rule updates based on threat intelligence

- Adaptive rate limiting based on server load

- Automatic response to emerging security threats
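
Real-time rule updates and load-based rate limiting imply that robots.txt is generated rather than maintained by hand. Here is a minimal sketch. It assumes a hypothetical normalized load figure (0.0 for idle, 1.0 for saturated) and a list of agents flagged by your threat-intelligence feed. `crawl_delay_for_load` and `render_robots_txt` are illustrative names. Crawl-delay is a nonstandard extension that Googlebot ignores. Crawlers also cache robots.txt (Google generally for up to 24 hours), so any change takes effect with a lag.

```python
from __future__ import annotations


def crawl_delay_for_load(load: float, min_delay: int = 1, max_delay: int = 30) -> int:
    """Scale Crawl-delay (seconds) linearly with a normalized load in [0, 1]."""
    load = min(max(load, 0.0), 1.0)  # clamp out-of-range readings
    return max(min_delay, round(max_delay * load))


def render_robots_txt(disallow: list[str], blocked_agents: list[str], load: float) -> str:
    """Build robots.txt text from the current policy instead of a static file."""
    lines: list[str] = []
    for agent in blocked_agents:
        # Agents flagged by threat intelligence get a blanket Disallow.
        lines += [f"User-agent: {agent}", "Disallow: /", ""]
    lines.append("User-agent: *")
    lines += [f"Disallow: {path}" for path in disallow]
    lines.append(f"Crawl-delay: {crawl_delay_for_load(load)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_robots_txt(["/api/", "/admin/"], ["EvilScraper"], load=0.8))
```

Serving this text from the /robots.txt route, and regenerating it on a schedule or when the threat feed changes, keeps the file in step with current conditions.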

2. AI-Enhanced Protection

The next generation of robots.txt tooling may leverage AI to:

- Predict and prevent sophisticated attacks

- Automatically adjust security parameters

- Integrate with advanced security analytics

Practical Implementation Steps

To implement these advanced security features:

- Audit your current robots.txt configuration

- Identify sensitive areas requiring protection

- Implement monitoring and logging

- Set up integration with security tools

- Configure automated responses

- Test and update regularly
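
You can partly automate the audit step. The sketch below uses a hypothetical `audit_robots` helper and a site-specific list of sensitive paths that you would supply. It reports two things: sensitive paths that a compliant crawler may still fetch, and sensitive paths that the file advertises to anyone who reads it. It relies on Python's `urllib.robotparser` and checks only for literal path matches when building the advertised list.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt: str, sensitive_paths: list[str],
                 agent: str = "GenericCrawler") -> dict[str, list[str]]:
    """Compare a robots.txt file against paths you consider sensitive."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    # Sensitive paths that a compliant crawler is still allowed to request.
    uncovered = [p for p in sensitive_paths if rules.can_fetch(agent, p)]
    # Paths named verbatim in Allow/Disallow lines are public knowledge.
    listed = {
        line.split(":", 1)[1].strip()
        for line in robots_txt.splitlines()
        if line.strip().lower().startswith(("allow:", "disallow:"))
    }
    advertised = [p for p in sensitive_paths if p in listed]
    return {"uncovered": uncovered, "advertised": advertised}


if __name__ == "__main__":
    current = "User-agent: *\nDisallow: /admin/\nDisallow: /backups/\n"
    print(audit_robots(current, ["/admin/", "/backups/", "/internal/"]))
```

Paths that appear under `advertised` should be protected by authentication, not by obscurity.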

Measuring Success

Track these metrics to ensure effectiveness:

- Reduction in unauthorized access attempts

- Lower server load from crawler traffic

- Decreased security incidents

- Improved crawler behavior compliance
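
Most of these metrics reduce to simple before-and-after arithmetic over your logs. In this sketch, the compliance rate is the share of crawler requests that respected robots.txt, and the reduction in unauthorized attempts is the percent change between two comparable periods. The function names are hypothetical, and the numbers in the usage lines are illustrative, not measurements.

```python
def compliance_rate(crawler_requests: int, violations: int) -> float:
    """Fraction of crawler requests that obeyed robots.txt (1.0 if none were seen)."""
    if crawler_requests == 0:
        return 1.0
    return 1 - violations / crawler_requests


def percent_change(before: float, after: float) -> float:
    """Percent change from `before` to `after`; a negative value means a reduction."""
    if before == 0:
        raise ValueError("baseline period has no events; percent change is undefined")
    return (after - before) / before * 100


if __name__ == "__main__":
    # Illustrative numbers only.
    print(f"compliance: {compliance_rate(10_000, 250):.1%}")
    print(f"unauthorized attempts: {percent_change(1_200, 900):+.1f}%")
```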

Conclusion

The evolution of robots.txt from a simple crawler control tool to a security instrument reflects the changing nature of web security. By implementing these strategies, you can turn this basic file into a useful component of your security architecture. Keep in mind that the file is advisory: it guides well-behaved crawlers and generates useful signals, but it never replaces authentication and access control.

Remember, effective security isn't about having the most complex solutions – it's about using available tools intelligently and strategically. Start with these basic implementations and gradually build up your security posture based on your specific needs and threats.

https://guptadeepak.weebly.com/deepak-gupta/robotstxt-from-basic-crawler-control-to-ai-powered-security-shield

No comments:

Post a Comment