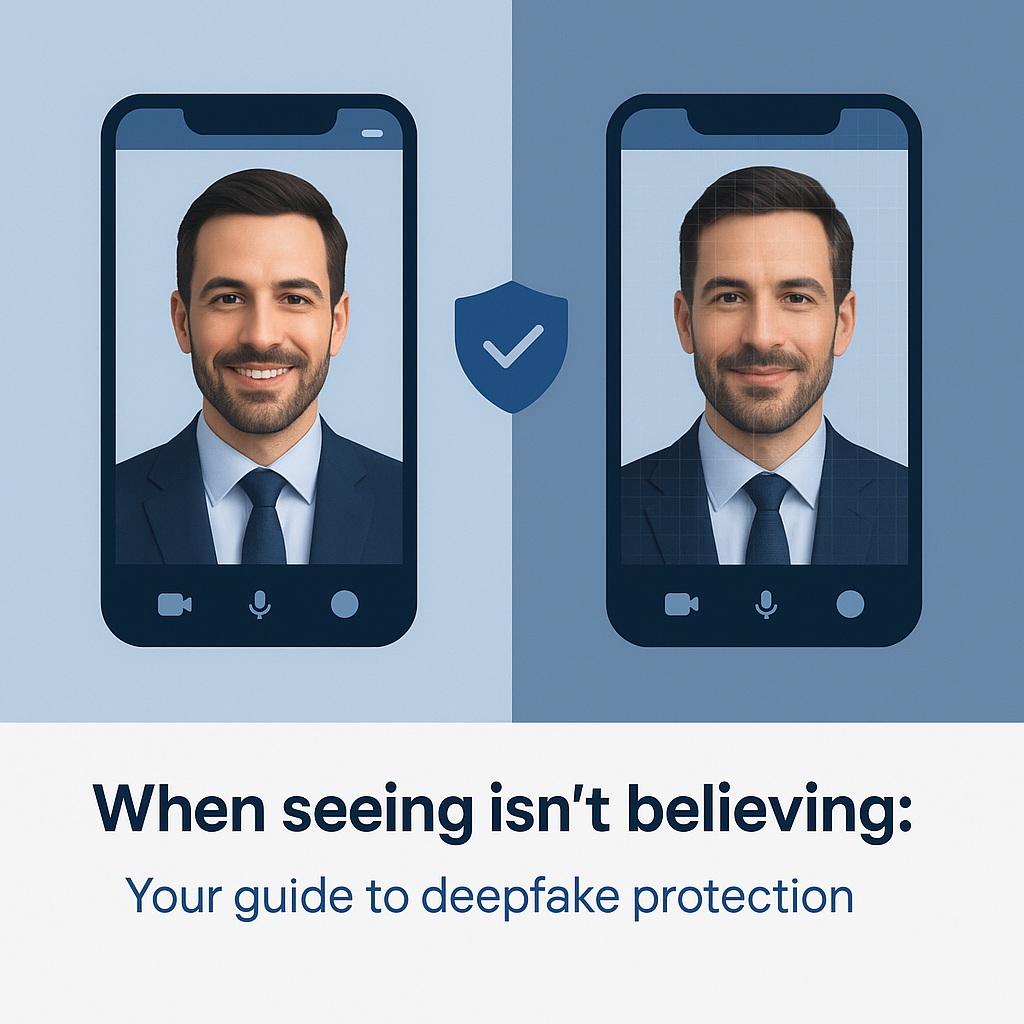

Have you ever received a phone call that sounded exactly like your boss asking you to buy gift cards for an urgent client meeting? Or seen a video of a celebrity saying something outrageous that seemed completely out of character? These might be examples of deepfakes – a technology that's becoming increasingly common in our digital world.

As our lives become more connected, understanding deepfakes isn't just for tech experts anymore. This article breaks down what deepfakes are, how they work, and most importantly, how you can protect yourself from becoming a victim of this digital deception.

What Is a Deepfake?

A deepfake is digital content (video, audio, or images) that has been created or altered using artificial intelligence to look and sound like someone else. Think of it as a high-tech impersonation. While traditional photo or video editing requires manual work and often leaves obvious signs of manipulation, deepfakes use AI to create forgeries that can be nearly impossible to detect with the naked eye.

The term "deepfake" combines "deep learning" (a type of AI) and "fake," highlighting how these sophisticated computer systems can create convincing impersonations of real people saying or doing things they never actually did.

How Do Deepfakes Work?

Imagine teaching a computer to play a very convincing game of copycat. That's essentially what happens with deepfakes.

Here's a simple breakdown of the process:

- Collection: The AI system is fed lots of images or recordings of the target person (the person being impersonated).

- Learning: The AI studies these samples to understand what the person looks like from different angles, how their face moves when they speak, or how their voice sounds across different words and emotions.

- Creation: Once the AI has learned these patterns, it can generate new content that mimics the target person's appearance or voice.

- Placement: The fake content is then placed onto another video or audio recording, making it appear as if the target person is saying or doing something they never did.

For example, a deepfake video might take footage of an actor and map a celebrity's face onto it, or alter real footage of the celebrity so they appear to say words they never actually spoke.

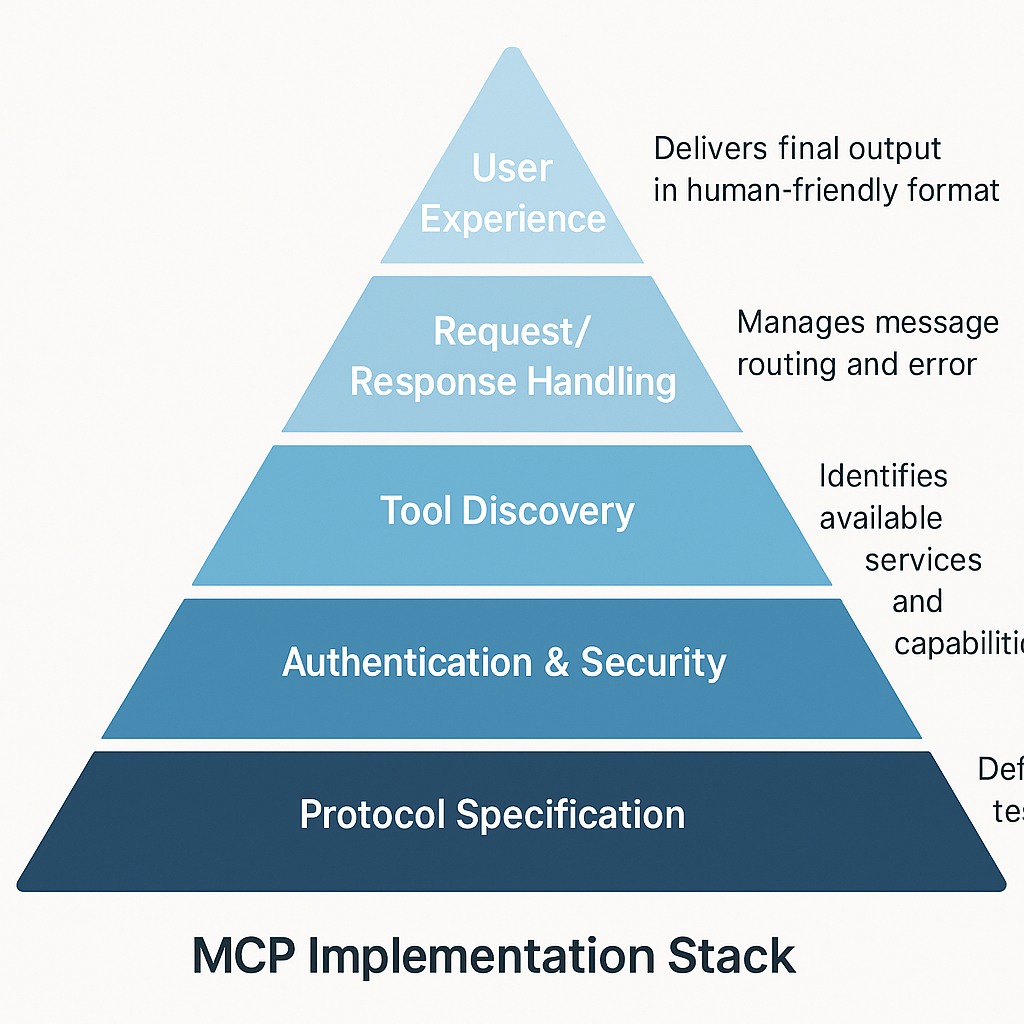

The Technology Behind Deepfakes

While we don't need to get too technical, understanding the basic technology helps explain why deepfakes are so powerful.

Two Main AI Approaches

1. GANs (Generative Adversarial Networks)

Imagine two AI systems competing against each other:

- One system (the "generator") tries to create fake images or videos

- The other system (the "discriminator") tries to spot the fakes

- They keep competing until the generator becomes so good that even the discriminator can't tell what's real anymore

This is like having a master art forger and a detective constantly challenging each other, with the forger becoming better and better at creating convincing fakes.
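For readers who like to see the idea in code, here is a minimal sketch of that forger-versus-detective loop. It is a toy, not how real deepfake tools are built: the "images" are single numbers, the generator only learns one offset `b`, and the discriminator is a one-line logistic model. The function name `train_toy_gan` and all of its parameters are made up for this illustration.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function, returns a value in [0, 1]."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 500, lr: float = 0.02,
                  real_mean: float = 4.0, seed: int = 0):
    """Toy 1-D GAN (illustrative assumption, not a real deepfake model).

    Generator:      fake = noise + b
    Discriminator:  D(x) = sigmoid(w * x + c), its guess that x is real
    """
    rng = random.Random(seed)
    b = 0.0           # generator parameter (the "forger")
    w, c = 0.0, 0.0   # discriminator parameters (the "detective")

    for _ in range(steps):
        real = rng.gauss(real_mean, 0.5)
        fake = rng.gauss(0.0, 0.5) + b

        # Detective's turn: raise D(real) and lower D(fake).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Forger's turn: nudge b in the direction that raises D(fake).
        d_fake = sigmoid(w * fake + c)
        b += lr * (1.0 - d_fake) * w

    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"generator offset b={b:.2f}, discriminator w={w:.2f}, c={c:.2f}")
```

Each round, the generator is pushed in whichever direction the discriminator currently scores as "more real." Real systems apply the same tug-of-war to millions of pixel values instead of one number, and training can oscillate rather than settle neatly.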

2. Autoencoders

These systems learn to:

- Compress images (like facial features) into simpler forms

- Then rebuild them with specific changes

- For deepfakes, they learn to swap one person's face onto another person's body while maintaining natural movement
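The face-swap trick is easier to picture as a data flow: one shared encoder that keeps only pose and expression, plus a separate decoder per person that paints that person's identity back on. The sketch below shows only that flow. Nothing is learned here; the hand-written functions stand in for trained neural networks, and the four-number "face" format (two identity values followed by two expression values) is an assumption made purely for illustration.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def encode(face: Sequence[float]) -> Vector:
    """Shared encoder stand-in: compress a face to its expression code.

    In this toy format the first two numbers are identity and the last
    two are pose/expression, so "compressing" just drops the identity.
    """
    return list(face[2:])


def make_decoder(identity: Sequence[float]) -> Callable[[Sequence[float]], Vector]:
    """Build a per-person decoder that rebuilds a face with that identity."""
    def decode(code: Sequence[float]) -> Vector:
        return list(identity) + list(code)
    return decode


def swap_face(frame: Sequence[float],
              target_decoder: Callable[[Sequence[float]], Vector]) -> Vector:
    """Encode one person's frame, then decode it as someone else."""
    return target_decoder(encode(frame))


# Hypothetical people and values, chosen only for the example.
alice_identity = [0.9, 0.1]
bob_identity = [0.2, 0.7]
decode_alice = make_decoder(alice_identity)
decode_bob = make_decoder(bob_identity)

bob_frame = bob_identity + [0.5, -0.3]        # Bob with a particular expression
swapped = swap_face(bob_frame, decode_alice)  # Alice's identity, Bob's expression
```

Feeding Bob's frame through Bob's own decoder simply rebuilds Bob. Feeding it through Alice's decoder keeps Bob's movement but outputs Alice's face, which is the swap described above.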

What Makes Today's Deepfakes Concerning

Modern deepfake technology has advanced rapidly:

- Minimal material needed: Today's systems can create a convincing voice clone from just a few minutes of recorded speech, or a face replica from just a few good photos.

- Accessibility: What once required expensive equipment and technical expertise can now be done with free apps and minimal technical knowledge.

- Quality improvements: Early deepfakes had obvious flaws like unnatural blinking or poor lip syncing. These issues have largely been overcome in sophisticated deepfakes.

How Deepfakes Disrupt Everyday Life

Deepfakes aren't just a concern for celebrities or politicians. They can affect ordinary people in several ways:

Personal Impacts

Financial fraud: Imagine getting a call that looks or sounds exactly like your sibling asking for emergency money. This type of "family emergency scam" has already been carried out with cloned voices, costing victims thousands of dollars.

Relationship damage: Deepfakes can create convincing evidence of someone saying hurtful things or appearing in compromising situations, potentially damaging personal and professional relationships.

Identity theft: Your face or voice could be used to access accounts or services that use biometric verification.

Broader Social Impacts

Trust erosion: As deepfakes become more common, we may start questioning everything we see and hear online. This "reality skepticism" can make it hard to know what to believe.

Information confusion: During important events like elections, deepfakes could spread misinformation quickly, making it difficult for people to make informed decisions.

The "liar's dividend": When real recordings can be dismissed as "probably deepfakes," people can more easily deny things they actually did or said.

Real-World Examples

These aren't hypothetical scenarios:

- In 2019, criminals used AI voice technology to impersonate a CEO's voice, convincing a manager to transfer €220,000 (about $243,000) to a fraudulent account.

- In the UK, an art gallery owner lost her business after scammers created deepfake video calls impersonating a famous actor interested in hosting an exhibition.

- In 2023, a small business employee was tricked by a scammer who cloned the owner's voice over the phone, resulting in financial losses.

How to Protect Yourself from Deepfakes

While the technology is evolving quickly, there are practical steps you can take to reduce your risk:

For Spotting Potential Deepfakes

Watch for visual clues:

- Blurring or changes in skin tone around the edges of the face

- Unnatural eye movements or blinking patterns

- Unusual facial expressions or head positions

- Lighting that doesn't match the rest of the scene

Listen for audio oddities:

- Robotic or unnatural speech rhythm

- Breathing patterns that sound mechanical

- Background noise that suddenly changes

- Voice tone that doesn't match the emotion being expressed

Question the context:

- Does the message seem out of character?

- Is there an unusual urgency to take action?

- Is the person asking for something they've never asked for before?

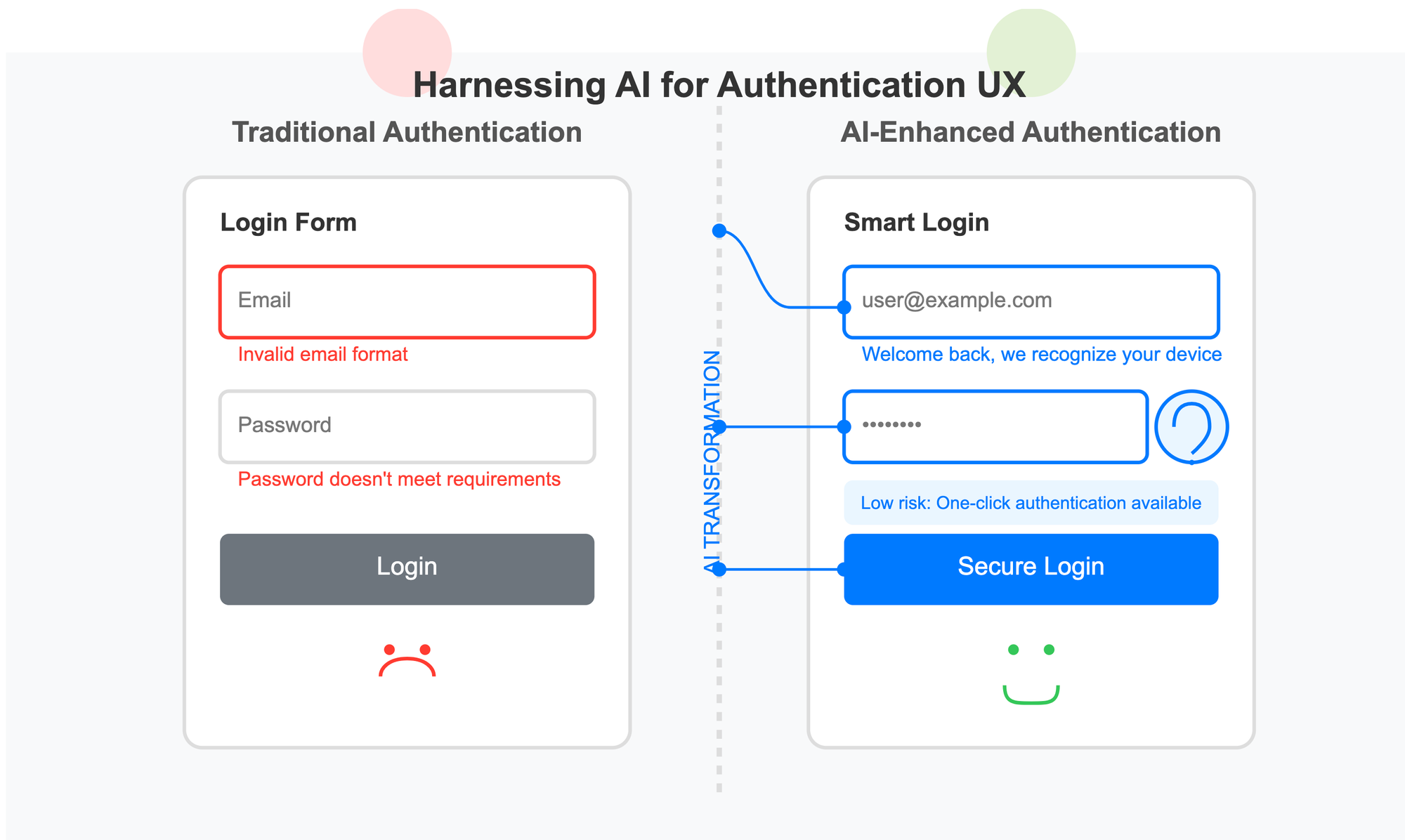

For Protecting Yourself

Verify through another channel: If you receive a surprising request via phone, video, or email, contact that person through a different method you know is legitimate. For example, if your "boss" calls asking for an urgent money transfer, hang up and call their regular office number.

Establish verification codes: With family members and close colleagues, consider setting up personal verification questions or code words for sensitive requests.

Limit your digital footprint: The less material available online (photos, videos, voice recordings), the harder it is for someone to create a deepfake of you.

Use strong authentication: Enable two-factor authentication on important accounts, preferably using an authenticator app rather than SMS.

Be skeptical of urgent requests: Scammers often create a false sense of urgency to prevent you from thinking critically. Take a moment to verify surprising requests, especially those involving money or sensitive information.
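If you are curious why the tips above favor an authenticator app over SMS: the app works out each code on your own device from a stored secret and the current time, so nothing has to travel over the phone network. Below is a short sketch of the time-based one-time password scheme (RFC 6238, built on RFC 4226) that most authenticator apps follow. The function names are our own, and the secret is the published test value from the RFC, not something to use for a real account.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a window
    number = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(number % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over 30-second windows."""
    return hotp(secret, unix_time // step, digits)


if __name__ == "__main__":
    import time

    demo_secret = b"12345678901234567890"  # RFC test secret, demo only
    print("Current code:", totp(demo_secret, int(time.time())))
```

The service you log in to runs the same calculation with the same secret and accepts the code only if the two results match. A scammer who clones your voice still has neither the secret nor your device.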

For Businesses

Create verification protocols: Establish clear procedures for authorizing financial transactions or data access, especially for requests that seem unusual.

Train your team: Make sure employees understand what deepfakes are and how to identify potential red flags.

Implement callback procedures: For sensitive requests, require staff to verify through established phone numbers, not the number that contacted them.
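A callback rule is simple enough to write down as code, which can help when turning it into a written policy or a helpdesk tool. The sketch below is one possible shape, under assumptions of our own: the `directory` is a company contact list you already trust, and the names `plan_callback` and `CallbackDecision` are hypothetical. The key point is that the number shown on the incoming call is never the one you dial back, because caller ID can be spoofed.

```python
from typing import Dict, NamedTuple, Optional


class CallbackDecision(NamedTuple):
    call_back_on: Optional[str]  # trusted number to dial, or None if none on file
    caller_id_matches: bool      # informational only; caller ID can be spoofed


def plan_callback(directory: Dict[str, str],
                  claimed_name: str,
                  incoming_number: str) -> CallbackDecision:
    """Decide which number to call to confirm a sensitive request.

    The incoming number is compared for the record but never returned
    as the number to dial; only the directory entry is trusted.
    """
    trusted = directory.get(claimed_name)
    matches = trusted is not None and trusted == incoming_number
    return CallbackDecision(call_back_on=trusted, caller_id_matches=matches)


# Hypothetical directory entry and phone numbers for illustration.
directory = {"Dana (CFO)": "+1-555-0100"}
decision = plan_callback(directory, "Dana (CFO)", "+1-555-0199")
```

In this example the request came from an unfamiliar number, so staff would hang up and dial the number on file. If no trusted number exists at all, the result is `None` and the request should be escalated rather than approved.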

The Future of Deepfake Protection

As deepfake technology evolves, so too will our defenses:

Watermarking: Content creators can embed digital watermarks in legitimate media so that later tampering becomes detectable.

AI detection tools: New technologies are being developed specifically to identify deepfakes, though they're in a constant race with deepfake creation tools.

Digital signatures: Future systems may include ways to verify that content was created by a specific person or device.

Media literacy education: As a society, we need to develop better skills for evaluating digital content critically.
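To make the digital-signature idea above a little more concrete, here is a minimal sketch of tamper detection. It is a simplification: it uses a keyed hash (HMAC) from Python's standard library as a stand-in for a true public-key signature, so the same secret key both creates and checks the tag. The function names and the notion of a per-device `device_key` are assumptions for illustration, not a description of any existing product.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, device_key: bytes) -> str:
    """Produce a tag that depends on every byte of the media and on the key."""
    return hmac.new(device_key, media_bytes, hashlib.sha256).hexdigest()


def is_untampered(media_bytes: bytes, device_key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_media(media_bytes, device_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical key and content for the example.
camera_key = b"demo-key-not-for-real-use"
original = b"raw bytes of a recorded video"
tag = sign_media(original, camera_key)

edited = original + b" with one altered frame"
print(is_untampered(original, camera_key, tag))  # True
print(is_untampered(edited, camera_key, tag))    # False
```

Changing even a single byte of the recording produces a different tag, so an edited copy no longer verifies. A real system would likely use public-key signatures so that anyone can check a recording without being able to forge one.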

Conclusion: Staying Safe in a World of Digital Deception

Deepfakes represent a new reality where seeing and hearing can no longer automatically mean believing. While this might seem concerning, remember that people have adapted to new communications technologies throughout history.

The best protection combines technology with good old-fashioned skepticism:

- Be aware that deepfakes exist

- Verify unexpected or unusual requests

- Trust your instincts when something feels off

- Use multiple channels to confirm important information

By understanding the basics of deepfakes and following these simple precautions, you can significantly reduce your risk of becoming a victim while navigating our increasingly digital world with confidence.

Remember: in a world where seeing isn't always believing, verification is your best defense.
